Neuromorphic Computers: What Will They Change?

Nayef Al-Rodhan argues that we need to consider the implications of computing power that can compete with humans.

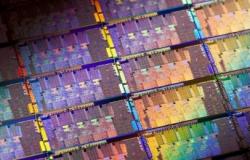

Computing power has reached a level that most engineers and computer scientists would have found difficult to predict just a decade ago. The incredible advance of artificial intelligence has evolved simultaneously with a general tendency toward miniaturization. These advances can be simply yet powerfully exemplified by the fact that many devices for personal or domestic use, from smartphones to toasters, have more computing power than the Apollo Guidance Computer, which put a man on the moon.

Computers nowadays are able to perform incredibly complex functions. Scientists are even working to embed a moral sense in robots, which would enable them to distinguish right from wrong and allow them to contemplate the moral consequences of their actions. Nevertheless, as intelligent as modern robots are, unmanned systems still appear ‘dumb’ in comparison to their human counterparts.

To challenge this asymmetry of capabilities, a new generation of scientists is working to create a brain-inspired computer, which would imitate the brain’s extraordinary ability to grasp the world. This is expected to be the next breakthrough in computing power, and would usher in a new era in terms of intelligent machinery.

Brain vs. computer

The human brain has a series of unique characteristics that cannot yet be replicated even by the most advanced computer. At the same time, one can argue that computers surpass humans in many ways: they are fast, reliable, unbiased and have high endurance – they do not get tired or bored. Humans, on the other hand, are biased, easily fatigued, and imperfect decision-makers: they can be inconsistent and prone to error. Nevertheless, even with these weaknesses, human beings present a set of features absent from the most advanced artificial intelligence systems. Humans have common sense, can think “outside the box”, and are good at learning; they can detect trends and patterns, and they can be creative and imaginative.

The research available to date shows that a typical adult human brain runs on about 20 watts. The human brain uses much less energy than a computer, yet it is far more complex and intricate. Brains and computers work differently, too. Computers process instructions sequentially and rely on a basic structure known as the “von Neumann architecture”, which has its origins in the 1940s work of the mathematician John von Neumann. This architecture shuttles data back and forth between the central processor and memory chips in a linear sequence of calculations.
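The shuttling between processor and memory can be made concrete with a toy machine. The sketch below is purely illustrative: the four-instruction set (LOAD, ADD, STORE, HALT) and the `run` function are invented for this example and do not correspond to any real processor. It adds two numbers and counts how often something has to cross between memory and the processor.

```python
# Toy von Neumann machine: one memory holds both program and data,
# and the processor handles one instruction at a time.
# The instruction set here is hypothetical, chosen only for illustration.

def run(memory):
    acc = 0          # single accumulator register inside the "processor"
    pc = 0           # program counter: address of the next instruction
    transfers = 0    # memory <-> processor transfers performed so far
    while True:
        op, arg = memory[pc]      # fetch the instruction from memory
        transfers += 1
        pc += 1
        if op == "LOAD":          # memory -> processor
            acc = memory[arg]
            transfers += 1
        elif op == "ADD":         # memory -> processor, then add
            acc += memory[arg]
            transfers += 1
        elif op == "STORE":       # processor -> memory
            memory[arg] = acc
            transfers += 1
        elif op == "HALT":
            return acc, transfers
        else:
            raise ValueError(f"unknown instruction: {op}")

# Program at addresses 0-3, data at addresses 4-6.
memory = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", None),
    2, 3, 0,
]
result, transfers = run(memory)
print(result, transfers)
```

Even this single addition needs seven trips between memory and processor, performed strictly one after another, which is the sequential traffic the paragraph above describes.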

By contrast, the brain is densely interconnected, with each neuron connected to thousands of others. In the brain, logic and memory are linked with a density and complexity computers cannot match. The average human brain contains from about 86 billion up to 100 billion neurons, and each neuron is within two to three connections of the others via countless potential routes. Together, these neurons may be passing signals to one another via as many as 1,000 trillion synaptic connections.
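As a rough consistency check on these figures, one can read the 1,000 trillion synapses as a total for the whole brain and divide it by the neuron counts quoted above. The short sketch below does only that arithmetic; the function name is chosen for illustration, and the even split across neurons is a simplifying assumption.

```python
# Back-of-the-envelope check using only the figures quoted in the text.
TOTAL_SYNAPSES = 1_000 * 10**12   # "1,000 trillion" synaptic connections

def synapses_per_neuron(neuron_count):
    """Average synapses per neuron, assuming an even split (a simplification)."""
    return TOTAL_SYNAPSES / neuron_count

for neurons in (86 * 10**9, 100 * 10**9):
    avg = synapses_per_neuron(neurons)
    print(f"{neurons:.1e} neurons -> about {avg:,.0f} synapses per neuron")
```

Either neuron count gives an average on the order of ten thousand synapses per neuron, in line with the statement that each neuron connects to thousands of others.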

Computers have benefited from incremental miniaturization over the past decades but have still not overcome critical limitations. This means, for instance, that although computing power has evolved dramatically, today’s microprocessors are still four orders of magnitude hotter than the brain (in terms of power).

Conventional computers contain very complex and powerful chips that allow them to perform precise calculations at high speeds. Brains, on the other hand, are slower but can deal with vague, unpredictable data and imprecise information. In a computer, the central processor is able to spin through a list of instructions and execute very precisely written programmes, but while it is able to crunch numbers, it is hardly able to make sense of inputs such as images or sound. So far, adding extra intelligent features has proven to be a difficult and highly laborious task. This was demonstrated by Google software, showcased in 2012, which required 16,000 processors simply to learn to recognize cats.

The dawn of neuromorphic computing

The contrast between the human brain and computers reveals the fundamental capabilities and advantages that humans have over computers, which work in an orderly manner and are pre-programmed for tasks. Such shortcomings are addressed by scientists who work on developing the brain-inspired computer.

Neuromorphic technology aims to mimic the neural network architecture of the brain. The origins of the technology date back to the late 1980s and the works of Carver Mead, considered the father of neuromorphic computing. In recent years, there has been renewed interest in neuromorphic engineering in universities and private companies. The foundational premise of the brain-like chip is to replicate the morphology of individual neurons and to build artificial neural systems. The ultimate goal is to create a computer which replicates some of the fundamental characteristics of the human brain.

While the neuroscientific study of the human brain is nowhere near finalized, interdisciplinary teams of scientists in Europe and the United States are hard at work in their attempts to realize the brain-computer analogy. Although neuroscience has yet to grasp fully (if this will ever be possible) all the intricacies of the human brain, neuromorphic engineers are aiming to design a computer which presents three of the characteristics of the brain that are known to date: low power consumption (human brains use less energy but are nevertheless immensely complex), fault tolerance (brains lose neurons and are still able to function, whereas microprocessors can be affected by the loss of one transistor) and no need to be programmed (unlike computers, brains are able to learn and respond spontaneously to signals from the environment).

Today there are several projects and approaches to neuromorphic technology, among which the best known is the TrueNorth chip developed at IBM. In 2011, the company revealed this brain-like chip, which was built on the hypothesis that the cerebral cortex comprises certain cortical microcircuits. IBM’s neurosynaptic core integrated both computation and memory. The chip reportedly works like the human brain: it does not have one large central processing unit but a huge number of interconnected artificial neurons.

The technical achievements of the chip are impressive: TrueNorth is packed with 4,096 processor cores and is able to mimic one million human neurons and 256 million programmable synapses. Essentially, the chip encodes data in the form of patterns of pulses, which is how neuroscientists believe the brain stores information. Significantly, the chip eschews the fundamental von Neumann architecture as it does not separate data-storage and data-crunching.
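To give a feel for what encoding data as pulses can mean, here is a minimal sketch of a leaky integrate-and-fire neuron, a textbook simplification commonly used to describe spiking systems. It is not TrueNorth's actual neuron model; the function name and the `threshold` and `leak` values are assumptions picked for illustration.

```python
# Minimal leaky integrate-and-fire neuron (a generic textbook model,
# NOT the neuron model of any specific chip).

def lif_spike_train(inputs, threshold=1.0, leak=0.9):
    """Return a list of 0/1 spikes, one per input time step."""
    potential = 0.0
    spikes = []
    for current in inputs:
        # Old charge partly leaks away; the new input is added on top.
        potential = potential * leak + current
        if potential >= threshold:
            spikes.append(1)      # fire a pulse...
            potential = 0.0       # ...and reset
        else:
            spikes.append(0)
    return spikes

weak = lif_spike_train([0.2] * 10)    # faint, constant stimulus
strong = lif_spike_train([0.6] * 10)  # stronger, constant stimulus
print(weak, sum(weak))
print(strong, sum(strong))
```

With these assumed parameters the stronger stimulus produces more pulses over the same period than the weaker one, so the strength of the input is carried by the timing and rate of spikes rather than by a stored number.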

Research in neuromorphic computing has been conducted in Europe as well, particularly under the auspices of the Human Brain Project and programmes like SpiNNaker, which purports to simulate neural networks in real time. Arguably, all these programmes are still in early stages of development and full-fledged incorporation of the technology might take longer than expected. Nevertheless, research and development are advancing at a promising speed. For example, SpiNNaker started in 2006, and from a version with only 18 processors, the team advanced to work on a 1 million processor machine. Ultimately, they hoped to be able to model about 1% of the human brain in real time.

What will it change? Implications for International Relations, Security and Humanity

The impact of neuromorphic computing will be dramatic in several ways.

The applications and implications of this new generation of computers could certainly help neuroscientists fill gaps in their understanding of the brain. Moreover, if and when they reach an operational stage, neuromorphic computers could fundamentally change our interaction with intelligent machines.

Some applications will include neuromorphic sensors in smartphones, smart cars and robots or olfactory detection. The uses of the technology could potentially be countless and, as stated by Dharmendra Modha, the lead developer at IBM, neuromorphic chips such as TrueNorth represent “a direction and not a destination”. The technology could integrate brain-like capabilities in devices and machines that are currently limited in speed and power. In effect, this could be the final step towards creating cognitive computing and systems that are able to learn, remember, reason or help humans make better decisions.

Neuromorphic technology could impact anything from consumer electronics to warfare. These developments will ultimately have implications for national power and the capabilities that define it. In today’s complex world, traditional geopolitical thinking must be complemented by a broader view of the multifaceted elements that define power, beyond the traditional considerations of territory, resources and military might. In previous work I suggested a theoretical framework of Meta-geopolitics which accounts for seven state capabilities, all of which will be affected by neuromorphic computing:

1. societal and health issues – brain-like computers could be used to help improve therapeutic procedures, impact medical imaging, or help create artificial retinas; they could also be used to enhance human potential beyond the average healthy state and, in the process, create massive social disruptions;

2. domestic politics – for example, neuromorphic computers could change (and enhance) surveillance. The technology could be employed to develop “intelligent cameras,” equipped with real-time video analysis capability;

3. economy – neuromorphic computers could impact financial markets, financial forecasting, or the monitoring of agriculture, and alternatively, they could render certain jobs performed by humans redundant;

4. environment – neuromorphic computers could dramatically improve data mining and pattern recognition systems to monitor certain biosphere processes;

5. science and human potential – neuromorphic computers could help create self-learning machines and assist humans in scientific research and job performance. However, conversely, they could accentuate social problems as the very notion of human potential would become less relevant;

6. military and security issues – drones equipped with a brain-like chip would be able to learn to recognize sites and objects previously visited and detect subsequent changes in the environment. Neuromorphic chips could be embedded in robots sent into combat zones, where they would be left to act and decide their course of action independently;

7. international diplomacy – neuromorphic computing could improve communication or risk analysis, but it could also give distinctly greater advantages to some countries which will benefit from more sophisticated intelligence than others.

These are only a few of the potential applications of the technology. In the future, neuromorphic computing will be revolutionary in scope, size, architecture, scalability, design and efficiency, but there remains significant scepticism with regard to its ultimate realization. From a technical standpoint, it remains unclear how chips like TrueNorth can perform when faced with large-scale state-of-the-art challenges, such as the recognition or detection of large objects. Furthermore, the capacity to learn, which would clearly distinguish existing computing paradigms from neuromorphic technology, is not fully verifiable at this point and many doubt that learning can happen on the chip.

Consequently, a fair amount of caution is warranted in the realm of ethics. Larger existential questions emerge from such advanced artificial intelligence systems. While it is clear that the technology is rather rudimentary for now, its full development could have irreversibly destructive implications. Discussions of the relations between humans and robots/artificial intelligence started to gain ground as early as the 1970s and they remain relevant. Nevertheless, today we are confronted more directly with some of the critical questions raised by these developments.

We need to consider with due responsibility the implications of developing machines that offer direct competition with humans. Even if no immediate catastrophes were to occur, such systems can redefine our relations with each other, our sense of self-worth, and the defining traits of our humanity.

Nayef Al-Rodhan (@SustainHistory) is an Honorary Fellow at St Antony’s College, University of Oxford, and Senior Fellow and Head of the Geopolitics and Global Futures Programme at the Geneva Centre for Security Policy. Author of The Politics of Emerging Strategic Technologies: Implications for Geopolitics, Human Enhancement and Human Destiny (New York: Palgrave Macmillan, 2011).

Photo credit: IntelFreePress via Foter.com / CC BY-SA